The Series

This is the first in a series of articles on Index Covering which is a performance technique to cut down disk IO and improve query performance. The first article here will define the basic elements in a query execution plan and how to find the bad parts. The next article will show how to fix them. Finally I'll conclude with some larger examples from PeopleSoft, JD Edwards, Siebel and SAP.

Introduction

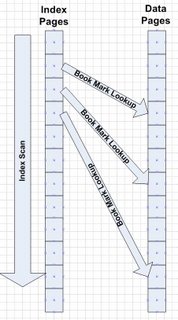

Databases have two basic structures for storing information. Index pages and data pages. Both reside on the disk. To achieve optimal performance, DBA’s create indexes which are really just organized subsets of data.

Since an index is a subset of data, extra reads are often needed to find all the additional information. Typically, this requires a bookmark lookup.

These extra reads on a disk can add up quickly and cause poor performance. The extra IOs also cause the database to use cache less efficiently.

What is a bookmark lookup?

Databases have a concept called “book mark lookups”. This means that the database has to find what it needs in the index page, then find the corresponding data page for the rest of the information required.

Think of it like this: A person needs to find George Washington’s birthday. They look in the index of an encyclopedia to find “Washington, George” which tells them to turn to page 98 to find an entry on him. On page 98, they find the birthday. This is an example of a “book mark lookup”. The researcher looks in the index, keeps their finger there, turns to a page in the book, finds the birthday for the rest of the information.

A bookmark lookup occurs when the database cannot find all the needed information for a query in the index page and so it goes to the data page to complete the request.

For example:

create table A (col_one int, col_two int, col_three int)

Go

create index A_P1 on a(col_one, col_two)

Go

[Data added to table…]

Select col_three

From A

Where col_one = ‘22’

In this example, the query would used index A_P1 to find the row pointer to the data page in the table, but since A_P1 index does not contain column col_three, it would have to do a book mark lookup from the index to the data page.

Why are bookmark lookups bad for performance?

Not all bookmark lookups are horrible. It just means that the database had to do some extra IO to find the data it needed. If you are only hitting a few rows, then it’s not an issue. If you are having to sort through hundreds or thousands of rows to find the one row you needed, or are in a massive nested loop, then book mark lookups can be a problem.

There are several examples below which will illustrate the pain in enterprise apps that can be large and small depending on the query.

How do you know you have a bookmark lookup?

A bookmark lookup shows up in the execution plan (ex. set statistics profile on) looking like this:

|--Bookmark Lookup(BOOKMARK:([Bmk1001]),OBJECT:([JDE_CRP].[CRPDTA].[F4311] AS [T1]))

Ok, so I got bookmark lookups, so what?

First, figure out if it’s causing a drag on the system. Look at the number of rows and executions that the bookmark lookup is using. Is it 5 or 50,000? This can be seen on the left hand side of the execution plan using set statistics profile on command.

In this example, zero rows were brought back and the loop was executed only once. This bookmark lookup was not a problem.

Rows Executes StmtText

------ --------- ---------------------------------------------------

0 1 |--Nested Loops(Inner Join,

0 1 |--Sort(ORDER BY:([T0].[ILUKID] DESC))

0 1 | |--Bookmark Lookup(BOOKMARK:([Bmk1000]),

0 1 | |--Index Seek(OBJECT:([JDE_CRP].[CRPDTA].[F4111].[x] AS

0 0 |--Clustered Index Seek(OBJECT:([JDE_CRP].[CRPDTA].[F4311].

Here is an example where a bookmark lookup caused severe problems. It is bringing back 85 million rows and is being executed 4,017 times.

Rows Executes StmtText

----------- ----------- ------------------------------------------------------------

1 1 |--Nested Loops(Inner Join, WHERE:([T1].[PR_POSTN_ID]=

364442 1 | |--Index Scan(OBJECT:([Siebel_Prd].[dbo].

85205956 4017 | |--Bookmark Lookup(BOOKMARK:([Bmk1001])

85205956 4017 | |--Nested Loops(Inner Join, OUTER

4017 4017 | |--Nested Loops(Inner Join, OUTER

4017 4017 | | |--Bookmark Lookup(BOOKMARK:([Bmk1002

4017 4017 | | | |--Nested Loops(Inner Join,

4017 4017 | | | | |--Bookmark Lookup(BOOKMARK:([Bmk

4017 4017 | | | | | |--Index Seek(OBJECT:([Siebel_

4017 4017 | | | | |--Index Seek(OBJECT:(

4017 4017 | | |--Clustered Index Seek(OBJECT:([Siebel

85205956 4017 | |--Index Seek(OBJECT:([Siebel_Prd].[dbo].[S_